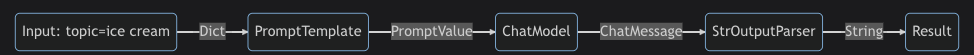

LCEL (LangChain Expression Language)

- A minimal example

from langchain_core.output_parsers import StrOutputParser

from langchain_core.prompts import ChatPromptTemplate

from langchain_openai import ChatOpenAI

# The template text is Chinese for "Tell me a joke about {topic}".
prompt = ChatPromptTemplate.from_template("给我讲一个关于 {topic}的笑话")

model = ChatOpenAI(model="gpt-4")

output_parser = StrOutputParser()

chain = prompt | model | output_parser

chain.invoke({"topic": "冰激凌"})
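The `|` in the chain above is ordinary Python operator overloading: each component exposes `invoke`, and `a | b` yields a new component that feeds the output of `a` into `b`. The sketch below imitates that idea with a hypothetical `Step` class and stub functions in place of the prompt, model, and parser. It is a toy illustration under those assumptions, not LangChain's actual implementation, and it makes no API calls.

```python
from typing import Any, Callable


class Step:
    """Hypothetical stand-in for a runnable: wraps a one-argument function."""

    def __init__(self, func: Callable[[Any], Any]) -> None:
        self.func = func

    def invoke(self, value: Any) -> Any:
        return self.func(value)

    def __or__(self, other: "Step") -> "Step":
        # a | b -> a new Step that runs a, then passes its result to b.
        return Step(lambda value: other.invoke(self.invoke(value)))


# Stubs standing in for the prompt, the model, and the output parser.
fake_prompt = Step(lambda inputs: f"Tell me a joke about {inputs['topic']}")
fake_model = Step(lambda text: {"content": f"(model reply to: {text})"})
fake_parser = Step(lambda message: message["content"])

toy_chain = fake_prompt | fake_model | fake_parser
result = toy_chain.invoke({"topic": "ice cream"})
print(result)
```

Because every piece shares the same `invoke` interface, the composed chain is itself a step and can be piped further.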

Prompt

prompt_value = prompt.invoke({"topic": "刺猬"})

prompt_value

# ChatPromptValue(messages=[HumanMessage(content='给我讲一个关于 刺猬的笑话')])

prompt_value.to_messages()

# [HumanMessage(content='给我讲一个关于 刺猬的笑话')]

prompt_value.to_string()

# 'Human: 给我讲一个关于 刺猬的笑话'

Model

message = model.invoke(prompt_value)

message

# AIMessage(content='一只兔子和一只刺猬赛跑,刺猬以微弱的优势赢了。\n\n兔子很不服气,说:“你只是运气好,我一定能赢你。”\n\n刺猬笑了笑,说:“好啊,那我们再比一次。”\n\n兔子问:“你为什么笑?”\n\n刺猬回答:“因为我知道,无论我跑多慢,你都会在我背后。” \n\n兔子:“为什么?”\n\n刺猬:“因为你不敢超过我,怕被我刺到。”', response_metadata={'finish_reason': 'stop', 'logprobs': None})

Difference when using an LLM (the result is a plain string, not an AIMessage)

from langchain_openai.llms import OpenAI

llm = OpenAI(model="gpt-3.5-turbo-instruct")

llm.invoke(prompt_value)

# '\n\nRobot: 为什么刺猬的生日派对总是很失败?因为他们总是把蜡烛都弄灭了!'

Output parser

output_parser.invoke(message)

#'一只兔子和一只刺猬赛跑,刺猬以微弱的优势赢了。\n\n兔子很不服气,说:“你只是运气好,我一定能赢你。”\n\n刺猬笑了笑,说:“好啊,那我们再比一次。”\n\n兔子问:“你为什么笑?”\n\n刺猬回答:“因为我知道,无论我跑多慢,你都会在我背后。” \n\n兔子:“为什么?”\n\n刺猬:“因为你不敢超过我,怕被我刺到。”'
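The outputs above show that a chat model returns a message object while a completion-style LLM returns a plain string. The parser step reduces either one to a string. A minimal sketch of that behavior follows, using a hypothetical `ToyMessage` class and `to_text` function as stand-ins; it is not the library's code.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class ToyMessage:
    """Hypothetical stand-in for a chat message such as AIMessage."""

    content: str


def to_text(output: Union[ToyMessage, str]) -> str:
    # Chat models return a message object; completion-style LLMs return a string.
    if isinstance(output, ToyMessage):
        return output.content
    return output


chat_output = ToyMessage(content="a joke from a chat model")
llm_output = "a joke from a completion model"

chat_text = to_text(chat_output)
llm_text = to_text(llm_output)
print(chat_text)
print(llm_text)
```

With this normalization at the end of the chain, downstream code can treat both model types the same way.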

RAG Search Example

- Build the vector store

- Use RAG to augment the answer

from operator import itemgetter

from langchain_community.vectorstores import FAISS

from langchain_core.output_parsers import StrOutputParser

from langchain_core.prompts import ChatPromptTemplate

from langchain_core.runnables import RunnableLambda, RunnablePassthrough

from langchain_openai import ChatOpenAI, OpenAIEmbeddings

vectorstore = FAISS.from_texts(
    ["harrison worked at kensho"], embedding=OpenAIEmbeddings()
)

retriever = vectorstore.as_retriever()

template = """Answer the question based only on the following context:
{context}

Question: {question}
"""

prompt = ChatPromptTemplate.from_template(template)

model = ChatOpenAI()

chain = (
    {"context": retriever, "question": RunnablePassthrough()}
    | prompt
    | model
    | StrOutputParser()
)

chain.invoke("where did harrison work?")
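In the chain above, the dict at the head acts as a parallel step: every value receives the same input, and the results are collected under the matching keys before reaching the prompt. A rough pure-Python picture of that data flow is sketched below. The names `toy_retriever`, `passthrough`, and `fan_out` are hypothetical, and a simple keyword match stands in for the FAISS similarity search.

```python
from typing import Any, Callable, Dict, List

DOCS = ["harrison worked at kensho"]


def toy_retriever(query: str) -> List[str]:
    # Hypothetical keyword match standing in for vector similarity search.
    words = set(query.lower().replace("?", "").split())
    return [doc for doc in DOCS if words & set(doc.split())]


def passthrough(value: Any) -> Any:
    # Returns the input unchanged, like a pass-through step.
    return value


def fan_out(steps: Dict[str, Callable[[Any], Any]], value: Any) -> Dict[str, Any]:
    # Run every step on the same input and gather the results by key.
    return {key: step(value) for key, step in steps.items()}


prompt_inputs = fan_out(
    {"context": toy_retriever, "question": passthrough},
    "where did harrison work?",
)
print(prompt_inputs)
```

The resulting dict has exactly the keys the template expects, `context` and `question`.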

Customizing the chain is just as simple

template = """Answer the question based only on the following context:
{context}

Question: {question}

Answer in the following language: {language}
"""

prompt = ChatPromptTemplate.from_template(template)

chain = (
    {
        "context": itemgetter("question") | retriever,
        "question": itemgetter("question"),
        "language": itemgetter("language"),
    }
    | prompt
    | model
    | StrOutputParser()
)

chain.invoke({"question": "where did harrison work", "language": "chinese"})
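Here the chain input is a dict rather than a string, so `itemgetter` picks out the field each branch needs. `itemgetter` comes from Python's standard `operator` module and can be tried on its own. The `build_prompt_inputs` helper and `toy_retriever` below are hypothetical stand-ins used only to show the routing, with no vector store or model involved.

```python
from operator import itemgetter
from typing import Dict, List


def toy_retriever(query: str) -> List[str]:
    # Hypothetical retriever: always returns the single stored document.
    return ["harrison worked at kensho"]


def build_prompt_inputs(inputs: Dict[str, str]) -> Dict[str, object]:
    get_question = itemgetter("question")
    get_language = itemgetter("language")
    return {
        # Mirrors itemgetter("question") | retriever: extract, then retrieve.
        "context": toy_retriever(get_question(inputs)),
        "question": get_question(inputs),
        "language": get_language(inputs),
    }


routed = build_prompt_inputs(
    {"question": "where did harrison work", "language": "chinese"}
)
print(routed)
```

Only the question is sent to the retriever; the language field bypasses retrieval and goes straight into the template.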